BERT-Large: Prune Once for DistilBERT Inference Performance

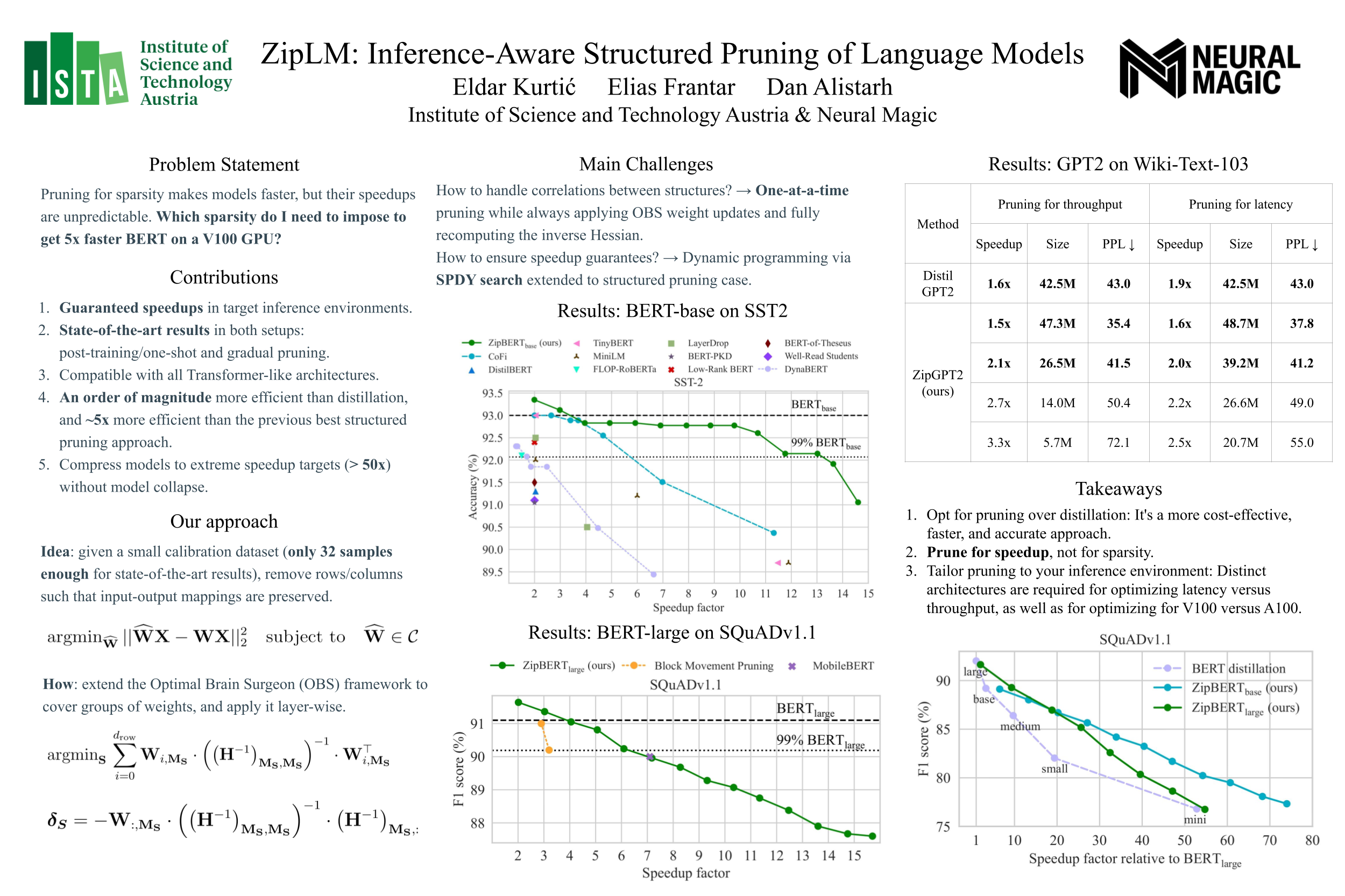

Compress BERT-Large with pruning and quantization to create a version that maintains accuracy while beating baseline DistilBERT on both performance and compression metrics.
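The summary above combines two standard compression steps. The sketch below is a rough, hypothetical illustration and not Neural Magic's actual recipe, which this page does not describe. It applies one-shot unstructured magnitude pruning to a small weight matrix, then symmetric per-tensor int8 quantization. The function names `magnitude_prune`, `quantize_int8`, and `dequantize` are illustrative assumptions, and pure Python lists stand in for real model tensors.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def magnitude_prune(weights: Matrix, sparsity: float) -> Matrix:
    """One-shot unstructured magnitude pruning (illustrative sketch).

    Zeros exactly int(n * sparsity) entries with the smallest |w|;
    ties are broken by position so the pruned count is deterministic.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n = sum(len(row) for row in weights)
    k = int(n * sparsity)  # number of weights to remove
    ranked = sorted(
        (abs(w), i, j) for i, row in enumerate(weights) for j, w in enumerate(row)
    )
    pruned = [row[:] for row in weights]  # copy; leave the input untouched
    for _, i, j in ranked[:k]:
        pruned[i][j] = 0.0
    return pruned


def quantize_int8(weights: Matrix) -> Tuple[List[List[int]], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, q in [-127, 127].

    Zeros map to 0 exactly, so the sparsity pattern survives quantization.
    """
    max_abs = max((abs(w) for row in weights for w in row), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [[max(-127, min(127, round(w / scale))) for w in row] for row in weights]
    return q, scale


def dequantize(q: List[List[int]], scale: float) -> Matrix:
    """Map int8 codes back to approximate float weights."""
    return [[v * scale for v in row] for row in q]


if __name__ == "__main__":
    W = [[0.5, -0.05, 0.2], [-0.8, 0.01, 0.3]]
    P = magnitude_prune(W, sparsity=0.5)  # drops the 3 smallest-magnitude weights
    q, scale = quantize_int8(P)
    print("pruned:", P)
    print("int8 codes:", q, "scale:", scale)
    print("dequantized:", dequantize(q, scale))
```

The rounding error per nonzero weight is at most half the scale. Note that dense int8 storage does not by itself shrink the zeros created by pruning. Turning sparsity into real size and latency gains generally requires a sparse-aware format or inference runtime.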

BERT-Large: Prune Once for DistilBERT Inference Performance - Neural Magic

The inference process of FastBERT, where the number of executed layers

How to Achieve a 9ms Inference Time for Transformer Models

NeurIPS 2023

Excluding Nodes Bug In · Issue #966 · Xilinx/Vitis-AI

Large Transformer Model Inference Optimization

Efficient BERT with Multimetric Optimization, part 2

Dipankar Das posted on LinkedIn